Abstract

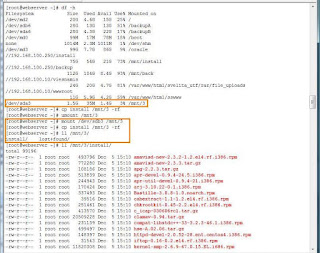

It's getting harder and harder to install good old Oracle 10g on newer CentOS versions. The last attempt, on 6.5, was semi-problematic, with some extra packages missing; deploying on 6.7 was even more challenging.

System specifications

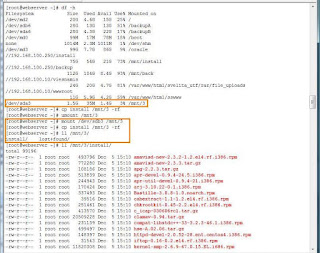

CentOS version (/etc/issue): CentOS release 6.7 (Final)

Oracle version: Version 10.2.0.1.0 Production (10201_database_linux_x86_64.cpio)

Problems and solutions

#1: Error invoking target 'ntcontab.o' of makefile

This error occurred at around 65% of the installation progress. Aborting is not an option: more errors follow, and in the end the whole process fails. After some testing it appeared that one process builds the file and the next one instantly deletes it, so the first workaround was to copy it into place manually:

# cp /misc/oracle/product/10.2.0/db_1/lib32/ntcontab.o /misc/oracle/product/10.2.0/db_1/lib/

In the end, though, the real fix seems to have been one of the following packages (which were not needed on 6.5):

# yum install libaio-devel.i686 -y

# yum install zlib-devel.i686 -y

# yum install glibc-devel -y

# yum install glibc-devel.i686 -y

# yum install libaio-devel -y

# yum install ksh -y

# yum install glibc-headers
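Before running yum it can help to see which of these packages are still missing. This is a minimal sketch assuming an RPM-based system; the helper name missing_packages is hypothetical, and it relies on rpm -q accepting both plain names and the name.arch form used for the i686 packages.

```bash
#!/bin/bash
# Sketch (hypothetical helper): print every package from the list that rpm reports as not installed.
packages=(libaio-devel.i686 zlib-devel.i686 glibc-devel glibc-devel.i686 libaio-devel ksh glibc-headers)

missing_packages() {
  local pkg
  for pkg in "$@"; do
    # rpm -q exits non-zero when the package (name or name.arch) is not installed
    rpm -q "$pkg" >/dev/null 2>&1 || echo "$pkg"
  done
}

# Usage (as root): install only what is missing, and skip yum entirely if nothing is
#   missing=$(missing_packages "${packages[@]}")
#   [ -n "$missing" ] && yum install -y $missing
```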

#2: /misc/oracle/database/product/10.2.0/db_1/sysman/lib/snmccolm.o: could not read symbols: File in wrong format

It might be solvable by throwing a few more x86 (i686) packages onto the pile, but I was not able to find the exact culprit. Many sources online simply say to ignore this error and fix it with the 10.2.0.4 patch set. That's what we'll do: ignore it.

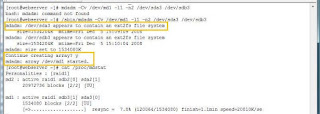

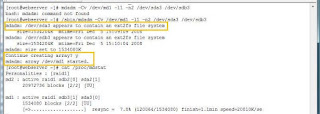

#3: ORA-27125: unable to create shared memory segment

The cause is unknown; DBCA raised it while the installation was continuing.

The solution is very simple. First, check the oracle user's group information:

[oracle@storage] $ id oracle

uid=500(oracle) gid=502(oinstall) groups=502(oinstall),501(dba)

[oracle@storage] $ more /proc/sys/vm/hugetlb_shm_group

0

As root, write the dba group ID (501) into the kernel parameter, so that members of dba are allowed to create shared memory segments using huge pages:

[root@storage] # echo 501 > /proc/sys/vm/hugetlb_shm_group

Continue with DBCA. The step will fail, but when you retry it the problem is gone and the database gets created.
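Writing to /proc only lasts until the next reboot. A minimal sketch for making the setting persistent, assuming the group is dba and that /etc/sysctl.conf is read at boot (the CentOS 6 default); the helper name hugetlb_sysctl_line is hypothetical:

```bash
#!/bin/bash
# Sketch (hypothetical helper): print the sysctl.conf line that sets
# vm.hugetlb_shm_group to the GID of the given group.
hugetlb_sysctl_line() {
  local gid
  # getent also resolves groups coming from LDAP/NIS, not only /etc/group
  gid=$(getent group "$1" | cut -d: -f3)
  [ -n "$gid" ] || return 1        # unknown group: print nothing and fail
  echo "vm.hugetlb_shm_group = $gid"
}

# Usage (as root): append the setting and load it right away
#   hugetlb_sysctl_line dba >> /etc/sysctl.conf && sysctl -p
```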

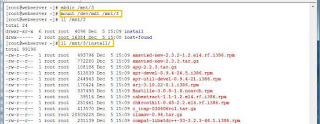

#4: bonus problem, not Centos 6.7 related: You do not have enough free disk space to create the database

My mistake: I had 12 TB of storage mounted and had missed the 10g storage requirement, which appears to be at least 400 MB but less than 2 TB of free space. I found no other solution than to unmount /dev/sdb1, shrink it with GParted to free up ~500 GB, format the new partition as ext4 and mount the new device /dev/sdb2 on another mount point, /oracle.
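A quick check of the free space on the target mount before starting DBCA can save the repartitioning. This is only a sketch: the ~2 TB ceiling (2 TiB used here) is the empirical limit observed above, not a documented Oracle value, and the helper names free_kb and too_big_for_10g are hypothetical.

```bash
#!/bin/bash
# Sketch: warn when a mount point reports more free space than the 10g
# installer seemed to handle. The limit is empirical, see the text above.
LIMIT_KB=$((2 * 1024 * 1024 * 1024))   # 2 TiB expressed in 1K blocks

free_kb() {
  # -P gives one POSIX-format line per filesystem; column 4 is "Available" (in KB with -k)
  df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

too_big_for_10g() {
  # $1: free space in KB; succeeds (exit 0) when it exceeds the limit
  [ "$1" -gt "$LIMIT_KB" ]
}

# Usage:
#   too_big_for_10g "$(free_kb /oracle)" && echo "more than ~2 TB free, DBCA may refuse"
```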

Conclusion

Move to 11g or 12c, it's about time.


Thursday, October 29, 2015

Friday, May 15, 2015

PDF page count on Linux commandline

# pdfinfo yourpdffilename.pdf | grep Pages | awk -F: '{print $2}' | tr -d '[:blank:]'

On CentOS, pdfinfo is provided by the poppler-utils package.
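pdfinfo prints a "Pages:" line, so a single awk pattern can do the work of the grep/awk/tr chain. A minimal sketch; the helper name pdf_pages is hypothetical, and it reads pdfinfo output on standard input so it can be reused in a loop over many files:

```bash
#!/bin/bash
# Sketch (hypothetical helper): print the number that follows "Pages:" in pdfinfo output.
pdf_pages() {
  # awk splits on runs of whitespace, so $2 is the page count
  awk '/^Pages:/ { print $2 }'
}

# Usage: page count for every PDF in the current directory
#   for f in *.pdf; do printf '%s\t%s\n' "$f" "$(pdfinfo "$f" | pdf_pages)"; done
```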


Wednesday, April 22, 2015

Shell: loop through multiple files and pass them to the script

Short intro

Script file ./main.sh is a bash script that processes htm/html files; HTML is the folder name. Problem: pass all the .htm files to the script as parameters for processing.

Passing files as parameters

# cd HTML

# ll

-rwxr-xr-x 1 oracle root 25828 Apr 13 15:03 filter

-rwxr-xr-x 1 oracle root 9955 Apr 13 15:03 filter.c

-rw-r--r-- 1 oracle root 2142 Apr 22 08:37 loader.ctl

-rw-r--r-- 1 oracle root 9792 Apr 22 08:46 loader.log

-rwxr-xr-x 1 oracle root 2648 Apr 22 10:04 main.sh

-rw-r--r-- 1 root root 781 Apr 22 09:49 10L31JKYRF5UH4.htm

-rw-r--r-- 1 root root 641 Apr 22 09:49 10L31JUKER1WP1.htm

-rw-r--r-- 1 root root 904 Apr 22 09:49 10L31JULIT5LI3.htm

-rw-r--r-- 1 root root 858 Apr 22 09:49 10L31JUOER5GT3.htm

-rw-r--r-- 1 root root 683 Apr 22 09:49 10L31JUPEM9TH9.htm

........................

# for FILE in *.htm; do ./main.sh "$FILE"; done
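Quoting "$FILE" matters as soon as a file name contains a space. A slightly hardened variant, as a sketch (process_all is a hypothetical name): nullglob makes a pattern that matches nothing expand to nothing, instead of passing the literal string *.htm to the script, and a failing file is reported without stopping the loop.

```bash
#!/bin/bash
# Sketch: run a processing script once per file, with safe quoting.
# nullglob: a pattern that matches nothing expands to nothing.
shopt -s nullglob

process_all() {
  local script=$1 f
  shift
  for f in "$@"; do
    # "$f" keeps a name with spaces as one argument
    "$script" "$f" || echo "failed: $f" >&2
  done
}

# Usage (inside the HTML folder):
#   process_all ./main.sh *.htm *.html
```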


Monday, April 20, 2015

SQL Loader: multiple tables, multiple problems

Short intro

Ongoing development of my AJAX crawler's importer led to data import problems. Oracle SQL Loader was used in all previous versions of my crawler, but this time there is a multi-table structure in both the datafile and the database. I spent almost two weeks on this simple subject, and after someone pointed out the solution I was not able to find more than two references to it online, so hopefully this is the third one for you: "position(1)". You must reset the field position when importing into multiple tables, even though this directive looks like a fixed-length argument. The table and datafile examples are shortened, just enough to explain the control file.

Tables

I'm importing invoices into three tables: inv_invoices_imp, inv_invoice_lines_imp and inv_invoice_comments_imp. inv_invoices_imp holds the invoice header, inv_invoice_lines_imp the accounting and invoice line data, and inv_invoice_comments_imp the user comments.

Datafile

The datafile is an HTML file containing 6 different tables/blocks: some text, the header table, some text, the lines table, the comments table, some more text. One datafile contains the data of one invoice.

SQL Loader config

Oracle SQL Loader can read, parse and load almost any type of data: fixed-width or delimited, single or multiple sources and destinations. In our case we have a multi-structured datafile and three different destination tables. Options used: truncate table, skip rows, conditional rows, fillers, sequences, foreign keys. If you are stuck with SQL Loader loading only empty rows while the log files show no errors, here is a checklist: column names, data types, missed separators, encoding. The only complete list I was able to find online covers all of these except the position(1) part.

Full loader.ctl file

OPTIONS (SKIP=1)

LOAD DATA

CHARACTERSET UTF8

INTO TABLE inv_invoices_imp

TRUNCATE

--APPEND

WHEN (1:1) = 'H'

FIELDS TERMINATED BY ';' OPTIONALLY ENCLOSED BY '"' trailing nullcols

(dummy0 FILLER, VENDOR_NUM, ACCOUNT_NUM, VENDOR_NAME, VENDOR_ORG, INVOICE_NUM, INVOICE_DATE "to_date(:INVOICE_DATE,'MM/DD/YYYY')",

DUE_DATE "to_date(:DUE_DATE,'MM/DD/YYYY')", VALUTA, AMOUNT "to_number(:AMOUNT,'99999999999.9999')", VALUTA_EX "to_number(:VALUTA_EX,'99999999999.9999')",

AMOUNT_NOK "to_number(:AMOUNT_NOK,'99999999999.9999')", KID, BILAGSNR, dummy1 FILLER, dummy2 FILLER, TAX "to_number(:TAX,'99999999999.9999')",

dummy3 FILLER, dummy4 FILLER, dummy5 FILLER, dummy6 FILLER, dummy7 FILLER, dummy8 FILLER, dummy9 FILLER, DERESREF, dummy10 FILLER,

dummy11 FILLER, dummy12 FILLER, INVOICE_ID EXPRESSION "INV_INVOICES_IMP_SEQ.nextval"

)

INTO TABLE inv_invoice_lines_imp

TRUNCATE

WHEN (1:1) = 'L'

FIELDS TERMINATED BY ';' OPTIONALLY ENCLOSED BY '"' trailing nullcols

(dummy0 FILLER POSITION(1), S1, S1_NAME, S2, S3, BELOP "to_number(:BELOP, '999999999999.9999')", DESCRIPTION, VAT_ID,

VAT_AMOUNT "to_number(:VAT_AMOUNT, '999999999999.9999')", BELOP_NOK "to_number(:BELOP_NOK, '999999999999.9999')",

S4, S5, S6, S7, dummy1 FILLER, FAKTURAID EXPRESSION "INV_INVOICES_IMP_SEQ.currval", ID EXPRESSION "INV_INVOICE_LINES_IMP_SEQ.nextval"

)

INTO TABLE inv_invoice_comments_imp

TRUNCATE

WHEN (1:1) = 'C'

FIELDS TERMINATED BY ';' OPTIONALLY ENCLOSED BY '"' trailing nullcols

(dummy FILLER POSITION(1), CUSER, ACTION, CDATE, DESCR, FAKTURAID EXPRESSION "INV_INVOICES_IMP_SEQ.currval",

ID EXPRESSION "INV_INVOICE_COMMENTS_IMP_SEQ.nextval"

)

Previous problems and config explanation

UTF8 - character set specification. I suggest you use it even if your file and database are already Unicode.

FILLER - a useful option: the column name that goes with it does not have to exist in the database table, but names cannot be duplicated within the same table, so number them: dummy1, dummy2, etc. If you don't know it yet: the field list is where you specify the order of the data in the datafile, using destination table column names. Use FILLER on the data columns you want to skip.

to_date, to_number - a must if your destination column is numeric or date. I suggest importing everything as VARCHAR2 at first, then converting to the desired data types and checking the columns one by one.

EXPRESSION with .NEXTVAL - worth mentioning: the value does not have to be in the datafile, but it is essential for creating the foreign key relation with the related tables.

POSITION(1) - hopefully the directive you are here for. It is used twice, in the two child tables, and placed on the first field of each field list. When loading into more than one table, the position has to be reset for each table after the first one by using POSITION(1) with the first field, even though it looks like a fixed-length directive. If you miss it, you end up with nice empty table rows with sequences and foreign keys filled in, and no errors in the log file. With some luck you might see an "all fields were null" message, but you have to be very lucky, and it is usually caused by some other, related error.

EXPRESSION with .CURRVAL - nothing magical, but this is how you establish the relation with the parent table. Hopefully your data complexity is similar. I'm also generating the child tables' IDs from sequences in the control file, just to show you the full picture. Child ID generation is only needed if you use the conventional data load path.
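Since a missing POSITION(1) fails silently, a crude text check on the control file can catch it before a load. This is a sketch under simple assumptions (each INTO TABLE starts on its own line, no commented-out clauses, and a POSITION(1) anywhere in the clause counts), not a SQL*Loader parser; check_position_reset is a hypothetical name. It prints the second and later tables whose clause never mentions POSITION(1) and exits non-zero if it finds any.

```bash
#!/bin/bash
# Sketch (hypothetical helper): flag INTO TABLE clauses, from the second one on,
# that never mention POSITION(1). Plain text matching, case-insensitive.
check_position_reset() {
  awk '
    function report() { if (n >= 2 && !seen) { print tbl; bad = 1 } }
    {
      line = toupper($0)
      if (line ~ /INTO[ \t]+TABLE/) {
        report()                     # close the previous clause
        n++; seen = 0; tbl = ""
        for (i = 1; i < NF; i++)     # table name is the word after TABLE
          if (toupper($i) == "TABLE") { tbl = $(i + 1); break }
      }
      if (line ~ /POSITION[ \t]*\([ \t]*1[ \t]*\)/) seen = 1
    }
    END { report(); exit bad }
  ' "$@"
}

# Usage:
#   check_position_reset loader.ctl || echo "some clauses do not reset the position"
```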

Sample datafile (chopped)

<...>

H;Leverandørnr;Bankkontonr;Leverandørnavn;Organisasjonsnr;Fakturanr;Fakturadato;Forfallsdato;Valuta;Fakturabeløp;Valutakurs;FakturabeløpNOK;KID;Bilagsnr;Scannebatch;Duplikat;Mvabeløp;Nettobeløp;Fakturatype;Val.dok;Selskapskode;Selskap;Refusjon postnr sted;refusjon Land;Deres Ref;Refusjon navn;Refusjon adresse;

H;40013;62190581506;TUR-RETUR AS - NO 870 989 587;870989587;105358;2/19/2015;3/1/2015;NOK;5064;1;5064;103071053583;80746991;;N;403;4661;1;;FT;GatoFly AS;;;;;;

L;Konto;Kontonavn;Avdeling;Prosjekt;Beløp;Bilagstekst;MVA-kode;MVA beløp;Beløp NOK;Anlegg;Produkt;Salgssted;Kanal;Sats

L;7135;Reisekostnader;4500;1400;220;Nye FT. opphold H.Hernes 26-28.2/1-3.3;0;0;220;;;;;0

L;7135;Reisekostnader;4500;1400;4844;Nye FT. opphold H.Hernes 26-28.2/1-3.3;1D;358.81;4844;;;;;8

L;Fakturahistorikk

C;Bruker;Handling;Dato;Kommentarer

C;BTIP Connector ;Lagret av BTIPC ;2/24/2015 11:12:12 AM ;E-invoice saved by BTIPC

C;brigde ;Kommentar lagt til ;2/24/2015 11:28:04 AM ;Autosirk- referanse blank

C;brigde ;Grunnlagsdata endret ;2/24/2015 11:28:04 AM ;fakturatype-1

C;BTHANDLER ;Kommentar lagt til ;2/24/2015 11:28:04 AM ;matchSupplierAccount. match på konto.40013

C;BTHANDLER ;Kommentar lagt til ;2/24/2015 11:28:04 AM ;Endret flytstatus

C;BTHANDLER ;Kommentar lagt til ;2/24/2015 11:28:04 AM ;setCompName OK.

<...>

As you can see, the data is semicolon separated, the first column is the destination identifier, and the date and number separators are visible as well. The data has some junk text lines, but they don't matter here. No external IDs or references are used. Invoice line records ("L") can be anywhere in the file; it does not matter that here they sit between the header and the comments. The first column and some others are marked as FILLER in the control file.
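Missed separators are one of the checklist items above. Counting the fields per record type and comparing the counts with the field lists in loader.ctl is a quick way to spot them. A sketch, assuming the record type is the text before the first ';' (count_fields_by_type is a hypothetical name); each output line is: record type, field count, number of records with that count. A stray line such as L;Fakturahistorikk shows up as its own L entry with 2 fields.

```bash
#!/bin/bash
# Sketch (hypothetical helper): how many ';'-separated fields each record type has.
count_fields_by_type() {
  awk -F';' '
    NF > 1 {                      # lines without any ";" are skipped as junk
      key = $1 " " NF             # record type + field count
      if (!(key in seen)) order[++k] = key
      seen[key]++
    }
    END { for (i = 1; i <= k; i++) print order[i], seen[order[i]] }
  ' "$@"
}

# Usage:
#   count_fields_by_type data_file.html.out
```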

HTML to datafile

Time to reveal a few more cards. The data file was an old HTML file: it was missing end tags, used a couple of non-standard tags, and the data formatting was not very handy either. Here is the full source of the bash script I used to prepare the file for loading.

#!/bin/bash

file="$1"

echo processing $file

echo converting to unicode

iconv -f utf-16 -t utf-8 "$file" > "$file".out

echo done

echo HTML cleanup

less "$file".out | tr ',' '.' | sed 's/ //g' | sed 's/\cM//g' | sed 's/\cW//g' | sed 's/<\/TR>/<\/TR> /g' | sed 's| sed 's/ / \n/g' | sed ':a;N;$!ba;s|\n

sed 's/ / \n/g' > "$file".clean

echo cleanup complete

echo header and lines separation

./filter -t 2 -c 2 -f "$file".clean > "$file".tmp

./filter -t 3 -f "$file".clean > "$file".lines

./filter -t 4 -f "$file".clean > "$file".comments

echo done separating

echo transposing headers

cols=2; for((i=1;i<=$cols;i++)); do awk -F ";" 'BEGIN{ORS=";";} {print $'$i'}' "$file".tmp | tr '\n' ' '; echo; done > "$file".header

echo transposed

echo cleanup

rm -f "$file".out

rm -f "$file".clean

rm -f "$file".tmp

echo cleaned up


echo single file

sed -e 's/^/H;/' "$file".header > "$file".out

sed -e 's/^/L;/' "$file".lines >> "$file".out

sed -e 's/^/C;/' "$file".comments >> "$file".out

echo joined

echo sql loader start

sqlldr schema/******@sid data="$file".out control=loader.ctl discard="$file".discard

echo loaded

Bash script usage is simple:

# script.sh data_file.html
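The script does not check how sqlldr finished. A sketch for reporting it, assuming the UNIX exit codes listed in the SQL*Loader documentation (0 success, 1 failure, 2 warning, 3 fatal); sqlldr_status is a hypothetical helper:

```bash
#!/bin/bash
# Sketch (hypothetical helper): translate a sqlldr exit code into a short message.
sqlldr_status() {
  case "$1" in
    0) echo "success: all rows loaded" ;;
    1) echo "failure: command-line/syntax, Oracle or OS error" ;;
    2) echo "warning: rows rejected or discarded, or load discontinued" ;;
    3) echo "fatal error" ;;
    *) echo "unknown exit code $1" ;;
  esac
}

# Usage, right after the sqlldr call in the script:
#   sqlldr schema/******@sid data="$file".out control=loader.ctl discard="$file".discard
#   echo "sqlldr: $(sqlldr_status $?)"
```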

Conversion explanations

Conversion - my HTML file was encoded in UTF-16, so the first step is converting it to UTF-8 to get a readable file instead of a binary-looking one.

HTML cleanup - examples and more explanations are available in the earlier post Crawling AJAX in practice. Part 2. In this case I had to add the missing end tags, generate data separators, and move some newlines back and forth to get a readable file.

Filter - a modified HTML table selection program. Its source is also available in Crawling AJAX in practice. Part 2. It picks the desired table and column data from a formatted HTML file.

Transposition - a new problem: the header table data is vertical, while the lines and comments are horizontal, so the header has to be separated and made horizontal as well.

Last steps - the transposed data is joined back into one working file, and each table's data gets a distinctive line marker (H, L, C) to be used with SQL Loader. The very last step is the SQL Loader call. You can skip the cleanup step if you need to inspect the temporary working files.
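The header transposition in the script runs one awk call per column. The same idea in a single awk pass, as a sketch (transpose_semicolon is a hypothetical name): output row i holds field i of every input line, joined with ';'. Unlike the script's loop, it does not leave a trailing ';' at the end of each row.

```bash
#!/bin/bash
# Sketch (hypothetical helper): transpose ';'-separated rows into columns.
transpose_semicolon() {
  awk -F';' '
    {
      for (i = 1; i <= NF; i++) cell[NR, i] = $i
      if (NF > maxnf) maxnf = NF
    }
    END {
      for (i = 1; i <= maxnf; i++) {
        row = cell[1, i]
        for (j = 2; j <= NR; j++) row = row ";" cell[j, i]
        print row
      }
    }
  ' "$@"
}

# Usage, as a replacement for the cols=2 loop:
#   transpose_semicolon "$file".tmp > "$file".header
```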

Contact

Contact me at simakas[at]gmail.com for details or the original source code if needed.

Labels: AJAX, HTML, Linux, Oracle, SQL Loader

Thursday, January 15, 2009

Crawling AJAX in practice. Part 2

Part 1

XULRunner + Crowbar

This is the only working solution I managed to build. You'll surely find descriptions of how to install and prepare XULRunner and Crowbar elsewhere; here is a short walkthrough:

Get XULRunner, SVN checkout fresh Crowbar build:

svn checkout http://simile.mit.edu/repository/crowbar/trunk/

I was installing on RHEL 4.2, so I had some problems with the GTK+ libraries: they are shared and can be neither updated nor removed. The evolution28 packages came to the rescue: evolution28-pango, evolution28-glib2, evolution28-cairo.

Untar XULRunner and move the Crowbar trunk folder so that it sits at the same level as XULRunner:

[root@host _CRAWL]# ll

drwxr-xr-x 5 root root 4096 Nov 12 15:03 trunk

drwxr-xr-x 11 root root 4096 Sep 26 06:44 xulrunner

Change to the xulrunner directory and run the installation. This produces an application.ini file, which is later passed as the parameter file.

./xulrunner --install-app ../trunk/xulapp

Now get an X session; VNC is a good solution. Change to trunk/xulapp, export the library path variable (in case you're stuck with the evolution28 libraries too), and get XULRunner up and running:

export LD_LIBRARY_PATH=/usr/evolution28/lib

cd trunk/xulapp

../../xulrunner/xulrunner application.ini

You now get two windows: Crowbar and an unnecessary debug console, which can be closed (VNC delete option). Crowbar can now be accessed with any browser on port 10000. We will abuse this port in the next section.

Getting the page source

Now we need a URL to the desired page; sadly, I was unable to find a way to follow links using Crowbar. Maybe some other tool should provide the links, for example Perl's WWW::Mechanize.

Let's say we have a direct link; I was playing around with ubs.com. Using cURL we finally get the page source.

curl -s --data "url=https://wb1.ubs.com/app/ABU/3/QCoreWeb/GRT_3_Aggregation/gvu/pg_mi/?grt_locale=en_US/&delay=3000" http://127.0.0.1:10000

-s - silent mode;

--data - followed by the POST data;

"url=...&delay=3000" - the target URL and the delay in milliseconds Crowbar waits for the content to be prepared; 5-10 seconds is normal;

http://127.0.0.1:10000 - Crowbar listening on port 10000.

cURL certainly has GET and POST mechanisms, but I was unable to get a proper link and perform a POST on pages that don't use CGI.

Save the output to a file by appending > ubs.html to the command.

Viewing the data

The cURL output has one problem: it's garbled, and you lose most of the newlines from the page source, which means we need one more tool. You can try opening the page with Firefox: all the data is there, not nicely laid out, but there. And all we need is the data. Using the stream editor sed, we transform the page into a viewable form. A command line that suits my test pages:

cat ubs.html | sed 's|/b>||g' | sed 's/b>/>/g' | sed 's|</|<|g' | sed 's|<|<|g' | sed 's/br&/> -e :a -e '/\/TD>$/N; s/\/TD>\n/\/TD>/; ta' | sed 's/<\/TABLE/\n<\/TABLE/g' > ubs_eol.html

It simply cleans up some Unicode characters and splits tables and rows onto new lines, so the page is ready to be parsed. An example of a more advanced filter, for a source file with more Unicode and more tabs and spaces:

less ubs.html | tr ',' '.' | sed 's/\cM/è/g' | sed 's/\cW/æ/g' | sed 's|/b>||g' | sed 's/b>/>/g' | sed 's|</|<|g' | sed 's|<| <|g' | sed 's/br&/> a" -e "P;D" | sed 's//TD>/g' | sed -e :a -e '/\/TD>$/N;s/\/TD>\n/\/TD>/; ta' | sed 's/ \n<\/TABLE/\n<\/TABLE/g' > ubs_eol.html

Next I use a custom C filter to get only the data, i.e. words and numbers. The filter splits the whole page into tables (by TABLE and DIV tags) and reads everything between the closing tag mark ">" and the next "<". It is parameterized and outputs just the table and column we need. An example request:

./filter -d -t 29 -c 3 | tr -d [=\'=] | grep Name -v > OUTPUT.csv

-d - use DIV tags for a more accurate table division;

-t 29 - show only table 29;

-c 3 - show column 3 (column #1 is always printed in addition to the requested one);
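The core idea of the filter, keeping only the text between a ">" and the next "<", can be approximated in the shell for quick experiments. This is a rough stand-in, not the author's C filter: it does not split by TABLE/DIV tags or select columns, and html_text_nodes is a hypothetical name.

```bash
#!/bin/bash
# Sketch (hypothetical helper): print the non-empty text found between ">" and the next "<".
html_text_nodes() {
  # grep -o prints each ">...<" piece on its own line; sed strips the marks;
  # the last grep drops pieces that are empty or whitespace only
  grep -o '>[^<]*<' "$@" | sed 's/^>//; s/<$//' | grep -v '^[[:space:]]*$'
}

# Usage:
#   html_text_nodes ubs_eol.html
```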

The output follows:

DAX,4336.73

DJ EURO STO,2257.67

DJ Industr ,8048.57

NASDAQ Comb,1465.17

Nikkei 225,8023.31

S&P 500,823.36

SMI,5382.44

Working examples


Friday, December 12, 2008

Special characters in shell

If you are seeing special characters when viewing a file with less, you are in the right place. I bumped into this problem last evening while trying to parse a UTF-8 encoded HTML file. You can type special characters in the shell with Ctrl+V followed by Ctrl+X, where X is the desired character. However, when you use, for example, a literal ^M in a bash script, it is not recognized, even if you edit it with vi. I found the solution on a Perl forum.

grep ^D

(type ctrl-V ctrl-D)

perl -ne 'print if /\cD/'

Use the \cX notation instead (Perl and GNU sed understand it), where X is the desired character. Example:

less ubs.html | tr ',' '.' | sed 's/\cM/č/g' > ubs_normal.html
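The same trick in plain bash and tr, as a sketch (the helper names are hypothetical): bash's $'...' quoting also understands \cX, tr understands \r, GNU sed understands \cM (assumed to be GNU sed), and cat -v is a quick way to see which control characters a file actually contains.

```bash
#!/bin/bash
# Sketch: three ways to match a carriage return (^M) without typing it literally.

# 1) bash ANSI-C quoting: $'\cM' is the same byte as $'\r'
cr=$'\cM'

# 2) tr understands the \r escape
strip_cr_tr() { tr -d '\r'; }

# 3) GNU sed understands \cM inside the regex
strip_cr_sed() { sed 's/\cM//g'; }

# cat -v shows invisible control characters, e.g. a carriage return as ^M
show_specials() { cat -v "$@"; }

# Usage:
#   show_specials ubs.html | less
#   strip_cr_tr < ubs.html > ubs_unix.html
```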

Labels: Linux

Thursday, December 11, 2008

Crawling AJAX in practice. Part 1

Some theory

Traditionally, a web spider system is tasked with connecting to a server, pulling down the HTML document, scanning the document for anchor links to other HTTP URLs and repeating the same process on all of the discovered URLs. Each URL represents a different state of the traditional web site. In an AJAX application, much of the page content isn't contained in the HTML document, but is dynamically inserted by Javascript during page load. Furthermore, anchor links can trigger Javascript events instead of pointing to other documents. The state of the application is defined by the series of Javascript events that were triggered after page load. The result is that the traditional spider is only able to see a small fraction of the site's content and is unable to index any of the application's state information.

Some findings

I've googled around for a few days and have found various information about crawling tools. There are more, but some are forgotten to mention or haven't been tried. Here is a quick summary of tools for getting page source.

1) Right click, View Page Source. A simple way to get the page source, but you'll burst out laughing when you see what it shows for a dynamic page. I was playing around with ubs.com -> quotes -> instruments.

2) Perl's WWW::Mechanize. It's a useful tool and I was happy using it, but sadly the result is static. It fills in forms and follows links, but the final page source is static: it digs out the text and the .css underneath, but ignores JavaScript. You can only get the source of the static version of a web page (which ubs.com has). This tool is useful for sabotage, for example: create disposable email accounts, vote online for car of the year, read the passkey from your temporary email, vote, and repeat the loop. No user interface is needed; while testing, open the page in a browser and compare it to the Mechanize agent->content.

3) Various Firefox extensions. Crap: the goal was to build a GUI-less mechanism, but these implementations require user interaction. The extensions work by tracking AJAX requests and recording user actions (like macros in Office). The names: iMacros, ChickenFoot, Selenium. iMacros is error-sensitive; for example, the first visit, when the cookie is set, differs from subsequent visits. Mechanize, by contrast, copes with such cases. I couldn't make use of the other extensions, because most of them don't work on Minefield or on Red Hat's Firefox 1.0-something.

4) Ruby + Watir. AJAX crawling after all! But there is a huge "but": it drives IE. There are also Watir implementations for Firefox and Safari, but I haven't tested them. Ruby copes with such errors too, and I made some progress, but the result is STATIC. I spent half a day looking for a way to save the page content to a file, and another day looking for a way to get the full page source, but didn't manage it. Please let me know if I'm wrong, but anyway, Skype has an emoticon for coding on Windows: (puke).

5) Yes, you're right, I saved the best for dessert: XULRunner + Crowbar. This stuff works, it rocks, and there is a working implementation; more about it in Part 2. Here is a quote: "...a server-side headless mozilla-based browser". It sounds promising, too. It runs as a daemon: you send it requests and get back the contents, AJAXed source included. It is a browser-based execution environment with a scraping tool on top. The page source you get back is kind of trash, though: no newlines, a bunch of HTML tags. There are a few ways to deal with that: pass it to Lynx, or process it yourself with a custom parser written in C. At last we have the numbers, not JavaScript function names.
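The clean-up step from item 5 could be sketched like this. It is a crude sed stand-in for Lynx or a custom C parser, not the author's code. It assumes GNU sed (for \n in the replacement) and that the rendered source has already been saved as one long line in rendered.html; the sample markup, quote values and file names are hypothetical.

```bash
#!/usr/bin/env bash
set -eu

# Hypothetical one-line blob, like the newline-free source a headless browser returns.
printf '<html><body><table><tr><td>UBS N</td><td>12.34</td></tr><tr><td>XYZ</td><td>56.78</td></tr></table></body></html>' > rendered.html

# 1) Put every tag on its own line (GNU sed turns \n in the replacement into a newline).
# 2) Strip the tags themselves.
# 3) Drop the empty lines that are left over.
sed 's/</\n</g; s/>/>\n/g' rendered.html \
  | sed 's/<[^>]*>//g' \
  | sed '/^[[:space:]]*$/d' > values.txt

# Keep only the lines that look like numbers.
grep -E '^[0-9]+([.][0-9]+)?$' values.txt > numbers.txt
cat numbers.txt
```

Regex tag stripping breaks on attributes containing ">" and on inline scripts, which is exactly where a proper parser (or Lynx) earns its keep.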

Clarification

The purpose of this task is not really to crawl from one page to another; what matters is only the numbers that are pushed (AJAX'ed) in via JavaScript.

Part 2

Friday, December 5, 2008

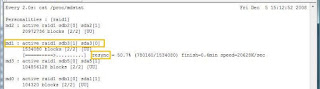

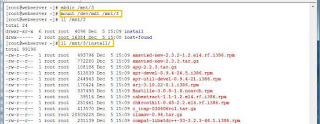

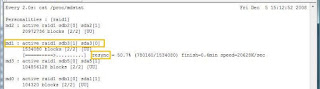

MD create, overwrite question

We had an argument today with my friend DeepM: does creating a software RAID1 with mdadm overwrite the partition, or at least garble its superblock, partition table or file system? If someone bumps into this question one day, here is the answer: NO, it does not.

I took two SATA partitions, sda3 and sdb3, ran mkfs.ext3 on them and copied some stuff to both.

In picture 1, you can see the contents.

Next, I create an md device from both partitions with the command:

mdadm -Cv /dev/md1 -l1 -n2 /dev/sda3 /dev/sdb3

mdadm kindly warns me that the partition contains a file system with (probably) files in it. Thank you, I know what I'm doing. Just press "y".

The resync process starts; 1.5G takes a few seconds to resync.

Picture 3 below: resync in progress.

After the process is done, I mount the newly created md1 device, check its contents and VOILA: everything is in place.
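Why the file system survives: with the 0.90 metadata format, which mdadm used by default in that era, the md superblock is written near the end of the partition, while data starts at sector 0. (The 1.0 format also sits at the end; 1.1 sits at the start and 1.2 sits 4 KiB from the start, so the result above should not be taken for granted with those.) The sketch below reproduces the 0.90 placement arithmetic, mirroring the MD_RESERVED_SECTORS and MD_NEW_SIZE_SECTORS macros in the kernel header linux/raid/md_p.h; the function name and the example partition size are hypothetical.

```bash
#!/usr/bin/env bash
set -eu

# 0.90 metadata reserves a 64 KiB block (128 sectors of 512 bytes) at the end
# of the partition, aligned down to a 64 KiB boundary (mirrors MD_RESERVED_SECTORS).
RESERVED_SECTORS=128

# Hypothetical helper: sector where the 0.90 superblock starts, which is also
# the usable size of the md device (mirrors MD_NEW_SIZE_SECTORS).
sb_offset_sectors() {
  local size=$1   # partition size in 512-byte sectors
  echo $(( (size & ~(RESERVED_SECTORS - 1)) - RESERVED_SECTORS ))
}

# Example: a 1.5 GiB partition (3145728 sectors) with an odd 100-sector tail.
size=$(( 3145728 + 100 ))
off=$(sb_offset_sectors "$size")
echo "partition size:         $size sectors"
echo "superblock at sector:   $off"
echo "bytes given up at end:  $(( (size - off) * 512 ))"
```

Everything before the superblock, including the ext3 superblock and data at the start of sda3, is left alone, which matches the experiment. The flip side is that md1 ends up slightly smaller than the partition it sits on.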

Labels:

Linux

Friday, October 12, 2007

Daily geekshow

Two things for today.

Number one: there is a girl. She works at the steakhouse where I have lunch almost every day. After a few visits she remembered me, and now, when I come in, she disappears into the kitchen and comes back with my order. They have a different lunch menu each day, so I don't have to order anymore. It's a good feeling. But now I'm very curious what she is thinking. It's not that I'm interested in her, I'm just curious. It would be cool if she had The Sims mode or something :) but that's a very very very naughty geeky thought. She is a smart girl. But she never smiles. What is she thinking? Leave a message if you are one of those girls.

Number two: I just finished a Linux/Oracle server crash/recovery simulation. The whole process took 40 minutes, and the server is up and running. Woohoo. I'm proud of myself. It's not one of those Microsoft days.

Number three: yes, I said there are just two things for today, but I just got a reservation at the barber. Finally I'll get a haircut. I've been waiting for this glorious moment for about three months.
